Daemons and sockets

Containerization has become an industry standard that makes our applications easier to configure and deploy. Docker, the most popular tool, has become a synonym for "container", even though alternatives such as Podman exist. But what if you want to start a container inside another one? Is that a good idea, and what if you have no other way at all?

In this article, I am going to look at the way we process sessions locally. Processing sessions locally makes development more straightforward than going through a compute API, as we do in production. We can monitor the processes and files a worker creates without connecting to the cloud over SSH. Also, running a container with 'tail -f /dev/null' keeps it alive, so we can take a closer look at stdout and stderr without digging through logs, which makes debugging much easier. Moreover, we get killer features of some IDEs, such as the PyCharm container debugger. Yet the whole formula can be described in a single sentence.

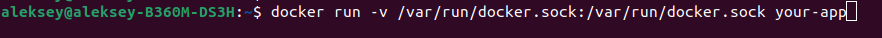

We install Docker inside the container with the main web app and give it access to the Unix domain socket that the Docker daemon listens on by default (/var/run/docker.sock). Here is how to run it with the Docker CLI and the socket mounted:

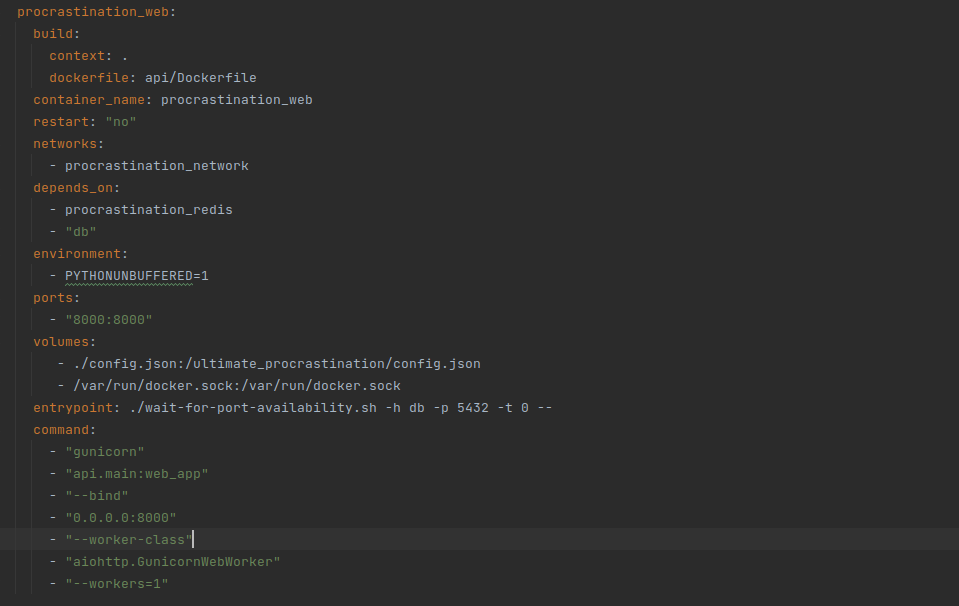
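Under the hood, the Docker CLI inside the container simply speaks HTTP to dockerd over that mounted socket. The minimal Python sketch below illustrates this. It assumes the default socket path /var/run/docker.sock and uses the Docker Engine API's `GET /_ping` health-check endpoint. The helper `docker_ping` is hypothetical. Real clients, such as the docker CLI or the Docker SDK for Python, also handle API versioning and chunked responses, which this sketch ignores.

```python
from __future__ import annotations

import socket

# Default path where dockerd listens. Inside the container it only exists
# if the host socket was mounted into it.
DOCKER_SOCK = "/var/run/docker.sock"


def docker_ping(sock_path: str = DOCKER_SOCK, timeout: float = 5.0) -> tuple[int, str]:
    """Hypothetical helper: send GET /_ping to the Docker daemon over a
    Unix domain socket and return (HTTP status code, response body)."""
    request = (
        "GET /_ping HTTP/1.1\r\n"
        "Host: docker\r\n"       # HTTP/1.1 requires a Host header; the value is arbitrary here
        "Connection: close\r\n"  # ask the server to close so we can read until EOF
        "\r\n"
    ).encode("ascii")

    with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as s:
        s.settimeout(timeout)
        s.connect(sock_path)  # the socket path is the daemon's whole address
        s.sendall(request)
        chunks = []
        while True:
            data = s.recv(4096)
            if not data:  # server closed the connection
                break
            chunks.append(data)

    raw = b"".join(chunks).decode("utf-8", errors="replace")
    head, _, body = raw.partition("\r\n\r\n")
    status_line = head.split("\r\n", 1)[0]  # e.g. "HTTP/1.1 200 OK"
    return int(status_line.split()[1]), body


if __name__ == "__main__":
    try:
        print(docker_ping())
    except OSError as exc:  # missing socket, no permission, refused, or timed out
        print(f"Docker daemon not reachable: {exc}")
```

If the socket is mounted and the process is allowed to use it, the call should report status 200. A missing mount typically surfaces as FileNotFoundError, and missing permissions surface as PermissionError.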

In docker-compose.yaml, the same socket is mounted as a volume of the service.

Let's take a deeper look at the two constituents of this formula: the daemon and the socket.

Let’s start with daemons. Where did this name come from?

In the Unix System Administration Handbook, Evi Nemeth says the following about daemons:

Many people equate the word "daemon" with the word "demon," implying some kind of satanic connection between UNIX and the underworld. This is an egregious misunderstanding. "Daemon" is actually a much older form of "demon"; daemons have no particular bias towards good or evil but rather serve to help define a person's character or personality. The ancient Greeks' concept of a "personal daemon" was like the modern idea of a "guardian angel"—eudaemonia is the state of being helped or protected by a kindly spirit. As a rule, UNIX systems seem infested with both daemons and demons.

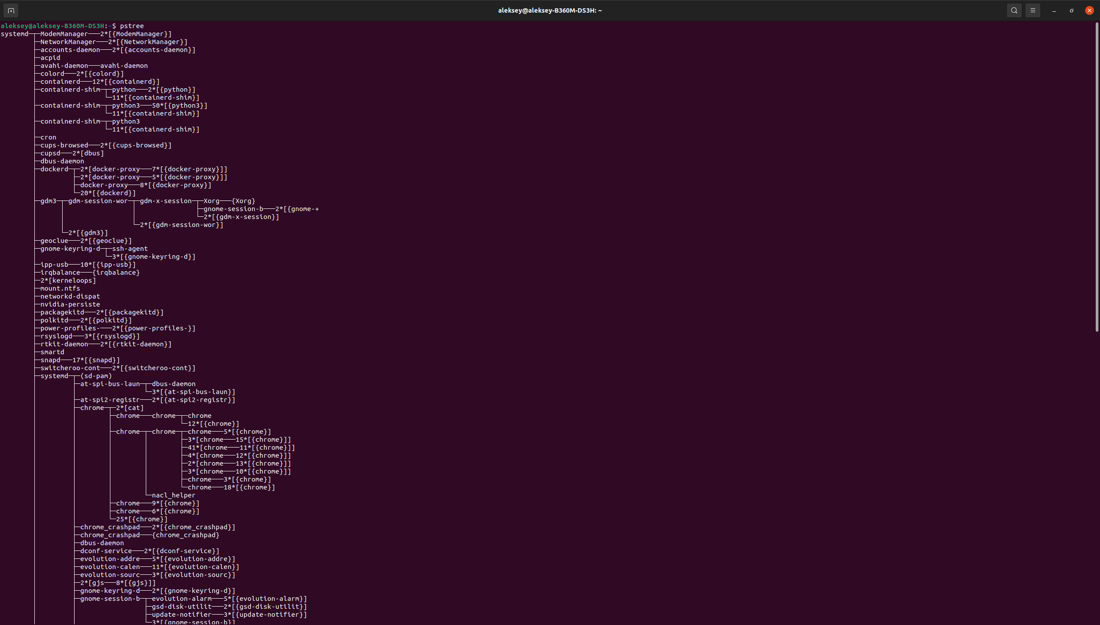

Daemons are usually utility programs that run in the background. They wait for events, serve specific subsystems, and enforce the rules by which the entire system operates. For example, a print daemon monitors the state of the print service, a network daemon manages network connections and monitors their status, and the Docker daemon (dockerd) manages containers and images on your OS. Looking ahead, the design of dockerd is exactly what makes running a container inside a container a problem. You can see the daemons currently running on your machine with pstree, top, or htop in your terminal. Their names usually end with the letter "d".
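The "ends with d" rule of thumb can also be checked from code. The sketch below assumes a Linux host, where every process exposes its name in /proc/<pid>/comm. The function `find_d_suffixed_processes` is a hypothetical helper. Because the suffix is only a naming convention, the result is a heuristic list of likely daemons, not an authoritative one.

```python
from pathlib import Path
from typing import List, Tuple


def find_d_suffixed_processes(proc_root: str = "/proc") -> List[Tuple[int, str]]:
    """Return sorted (pid, name) pairs for processes whose name ends with "d".

    Heuristic only: the trailing "d" is a naming convention, not a rule.
    Assumes a Linux-style procfs where <proc_root>/<pid>/comm holds the name.
    """
    found: List[Tuple[int, str]] = []
    root = Path(proc_root)
    if not root.is_dir():
        return found  # no Linux-style procfs here (e.g. macOS)
    for entry in root.iterdir():
        if not entry.name.isdigit():  # only numeric directories are processes
            continue
        try:
            name = (entry / "comm").read_text().strip()
        except OSError:  # process exited or access was denied mid-scan
            continue
        if name.endswith("d"):
            found.append((int(entry.name), name))
    return sorted(found)


if __name__ == "__main__":
    for pid, name in find_d_suffixed_processes():
        print(pid, name)
```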

What about the domain socket we use to communicate with dockerd? A Unix domain socket, also known as a UDS or IPC (inter-process communication) socket, is a data communications endpoint for exchanging data between processes running on the same host operating system. It is also referred to by its address family, AF_UNIX. Valid socket types in the Unix domain are:

- SOCK_STREAM (compare to TCP) – for a stream-oriented socket.

- SOCK_DGRAM (compare to UDP) – for a datagram-oriented socket that preserves message boundaries (as on most UNIX implementations, UNIX domain datagram sockets are always reliable and don't reorder datagrams).

- SOCK_SEQPACKET (compare to SCTP) – for a sequenced-packet socket that is connection-oriented, preserves message boundaries and delivers messages in the order that they were sent.
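The difference between SOCK_STREAM and SOCK_DGRAM is easiest to see through message boundaries. This Python sketch uses `socket.socketpair`, which creates a connected pair of AF_UNIX sockets on Unix platforms, and sends two messages through each type. The helper `receive_after_two_sends` is hypothetical. A stream socket only guarantees the order of the bytes, so the two writes may be merged into one read. A datagram socket returns each message separately.

```python
import socket
from typing import List


def receive_after_two_sends(sock_type: int) -> List[bytes]:
    """Send b"hello" then b"world" over a connected AF_UNIX socket pair
    and return every chunk the receiving end reads, in order."""
    sender, receiver = socket.socketpair(socket.AF_UNIX, sock_type)
    with sender, receiver:
        sender.send(b"hello")
        sender.send(b"world")
        # Both writes are already queued in the kernel, so drain the
        # receiver without blocking until nothing is left.
        receiver.setblocking(False)
        chunks = []
        while True:
            try:
                chunks.append(receiver.recv(1024))
            except BlockingIOError:
                break
        return chunks


if __name__ == "__main__":
    # SOCK_STREAM: only the byte order is guaranteed; writes may be merged.
    print("stream:", receive_after_two_sends(socket.SOCK_STREAM))
    # SOCK_DGRAM: each send() arrives as its own message.
    print("dgram: ", receive_after_two_sends(socket.SOCK_DGRAM))
```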

The Unix domain socket facility is a standard component of POSIX operating systems.

The API for Unix domain sockets is similar to that of an Internet socket, but rather than using an underlying network protocol, all communication occurs entirely within the operating system kernel. Unix domain sockets may use the file system as their address namespace (some operating systems, such as Linux, offer additional namespaces). Processes reference Unix domain sockets as file system inodes, so two processes can communicate by opening the same socket.
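Because the address is a file system path, a server and a client only need to agree on that path, just as dockerd and the Docker CLI agree on the default socket path. The Python sketch below binds a server to a path inside a temporary directory, confirms that the path is a socket file, and connects a client to it. The `echo_once` helper and the `demo.sock` file name are hypothetical.

```python
import os
import socket
import stat
import tempfile
import threading


def echo_once(sock_path: str, message: bytes) -> bytes:
    """Bind a server to sock_path, connect a client to the same path,
    and return what the server echoes back."""
    server = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
    server.bind(sock_path)  # creates a socket inode at sock_path
    server.listen(1)
    server.settimeout(5.0)  # keep the helper thread from hanging in accept()

    def serve() -> None:
        conn, _ = server.accept()
        with conn:
            conn.sendall(conn.recv(1024))  # echo a single read back

    worker = threading.Thread(target=serve)
    worker.start()
    try:
        # The bound address is visible in the file system as a socket file.
        if not stat.S_ISSOCK(os.stat(sock_path).st_mode):
            raise RuntimeError(f"{sock_path} is not a socket")
        with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as client:
            client.connect(sock_path)  # the path is the whole address
            client.sendall(message)
            reply = client.recv(1024)
    finally:
        worker.join()
        server.close()
        os.unlink(sock_path)  # closing does not remove the file
    return reply


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        print(echo_once(os.path.join(tmp, "demo.sock"), b"ping"))  # b'ping'
```

Closing the listening socket does not delete the socket file, so the sketch unlinks it explicitly. A stale file left at that path would make the next bind() fail with "Address already in use".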

Now let's get back to the point. What if you can't mount the socket because your cloud provider doesn't allow containers to be run this way? Then you have to run Docker inside another container. Jérôme Petazzoni, who created this option (Docker-in-Docker), describes the new issues it brings and does not recommend it. If you want to learn how to do it anyway, you should definitely read his post. The two main reasons not to do it are:

- It does not cooperate well with Linux Security Modules (LSM);

- It creates a mismatch between file systems, which causes problems for containers created inside the parent container.

In summary, containerization gives us a fantastic opportunity to unify processes across Linux systems and to debug more effectively, using mounts to take a closer look at input and output files, such as streams or the YAML describing a test pipeline. Nevertheless, it sometimes brings problems of its own: different Docker versions on host machines, which is uncommon when running in the cloud, or the way containers run inside each other.

This article did not turn out the way I originally planned, but I hope you enjoyed this short note and learned something new from it!