Review of the AV2 development process

First look at the AV2 development process

On 28 March 2018, after several years of development, the AV1 video codec standard was released by the Alliance for Open Media (AOM), a consortium founded by several technology companies to create novel royalty-free video coding technology for the internet.

While adoption of the standard is still under way (several software implementations already show real-time performance, and hardware codecs are expected to roll out soon), exploration of the successor codec technology, AV2, has already begun. The H.266 (VVC) standard, the successor to H.265 (HEVC), has been completed, so staying competitive requires work on a successor to AV1. In a comparison report [11] of VVC, EVC, and AV1 relative to HEVC, VVC provides the best compression efficiency in terms of PSNR, significantly outperforming AV1: a gain of 42% for VVC versus only 18% for AV1.

Research is currently at the exploration stage, aiming to show that there is still room for better compression and visual quality. Several research anchors that contain new coding tools beyond the AV1 specification can be found in Google's libaom repository: research v1.0.0, research v1.0.1, and the latest, research v2.0.0. These tools are at an early experimental stage and could be altered significantly in their final form or discarded altogether.

In this article, we evaluate the research anchor branch to find out whether it brings any quality improvement.

This article is arranged as follows. Section 1 briefly describes the current experiments. Section 2 describes the test methodology and the selected evaluation setup, and Section 3 covers test execution using the tools developed by ViCueSoft. Finally, Section 4 presents the experimental results, followed by the conclusion.

NEW CODING TOOLS INFORMATION

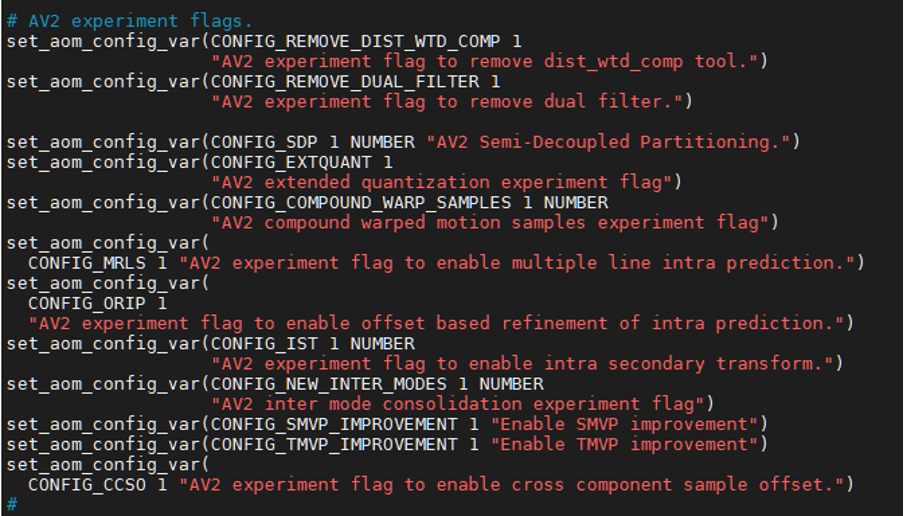

Experiments can be configured by editing the build/cmake/aom_config_defaults.cmake file, in the “# AV2 experiment flags” section. Some features look strongly inspired by VVC.

- DIST_WTD_COMP and DUAL_FILTER – these AV1 coding tools were removed from AV2.

- SDP - Semi-Decoupled Partitioning – is a new coding block partitioning method. Luma and Chroma share the same partitioning but only until specified partitioning depth. After this depth, partitioning patterns of luma and chroma can be optimized and signaled independently. A similar coding tool called Dual-Tree was earlier adopted into Versatile Video Coding (VVC). In both video standards, this method is only applied to key frames.

- EXTQUANT – a new quantization design: the 6 lookup tables for qstep are consolidated into a single one, which can also be replaced with a simple exponential formula. In addition, the maximum qstep value is increased to match HEVC in the minimum achievable bit rate.

- COMPOUND_WARP_SAMPLES. AV1 introduced a Warped motion mode, where model parameters are derived based on the motion vectors of neighboring blocks. These motion vectors are referred to as motion samples. AV1 considers only uni-predicted neighboring blocks which use precisely the same reference frame as the current block. This proposal for AV2 relaxes the motion sample selection strategy to consider compound predicted blocks as well.

- NEW_TX_PARTITION – in addition to 4-way recursive partitioning, introduces new patterns:
  - 2-way horizontal
  - 2-way vertical
  - 4-way horizontal
  - 4-way vertical

In AV1, recursive transform splitting was allowed for inter-coded blocks only. As in HEVC, the same flexibility is now available for intra-coded blocks.
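The partition patterns can be illustrated with a short Python sketch. It is an illustration only: the pattern names are hypothetical, and reading "horizontal" as a split by horizontal lines (producing stacked, full-width sub-blocks) is an assumption, not something taken from libaom.

```python
def tx_partition(width, height, pattern):
    """Return (x, y, w, h) transform sub-blocks for one pattern.

    Pattern names are hypothetical labels, not libaom identifiers.
    """
    if pattern == "none":
        return [(0, 0, width, height)]
    if pattern == "split4":
        # 4-way split into quadrants (can be applied recursively).
        w, h = width // 2, height // 2
        return [(x, y, w, h) for y in (0, h) for x in (0, w)]
    if pattern in ("horz2", "horz4"):
        # Assumed meaning: full-width sub-blocks stacked vertically.
        n = int(pattern[-1])
        h = height // n
        return [(0, i * h, width, h) for i in range(n)]
    if pattern in ("vert2", "vert4"):
        # Assumed meaning: full-height sub-blocks placed side by side.
        n = int(pattern[-1])
        w = width // n
        return [(i * w, 0, w, height) for i in range(n)]
    raise ValueError(f"unknown pattern: {pattern}")


for name in ("split4", "horz2", "vert2", "horz4", "vert4"):
    print(name, tx_partition(32, 32, name))
```

Every pattern covers the whole block exactly once; only the shape of the sub-blocks differs.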

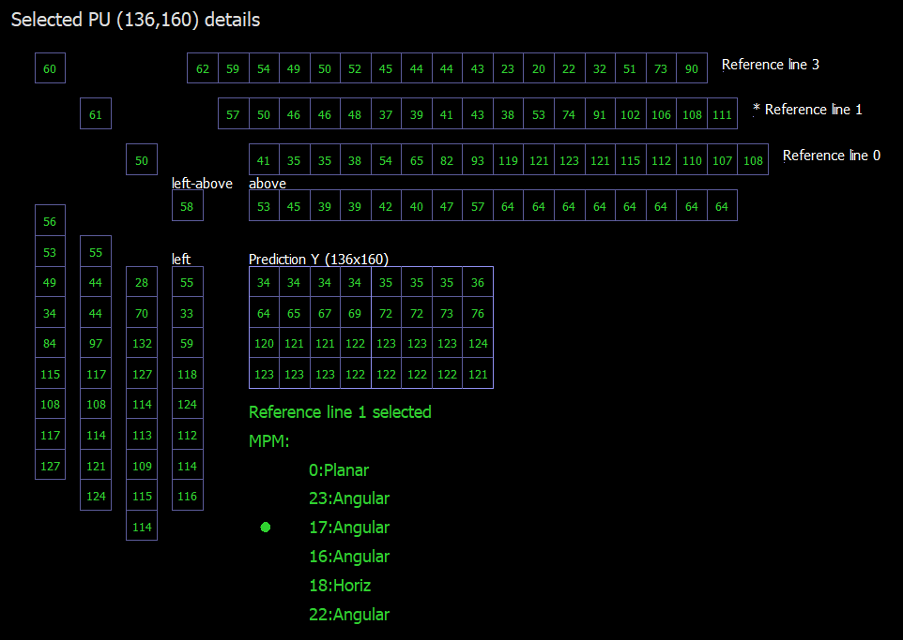

- MRLS – Multiple Reference Line Selection for intra prediction – is a method for intra-coded blocks in which farther reference lines (up to 4) above and to the left of the current block can be used for intra prediction. MRLS is applied only to the luma component, since chroma texture is relatively smooth. For non-directional intra prediction modes, MRLS is disabled. The reference line selection is signaled in the bitstream. A similar coding tool, Multiple Reference Line (MRL), was also adopted into VVC.

- ORIP - offset-based intra prediction refinement – in this method, after generating the intra prediction samples, the prediction samples are refined by adding an offset value generated using neighboring reference samples. The refinement is performed only on several lines of the predicted block's top and left boundary to reduce the discontinuity between the reference and predicted samples.

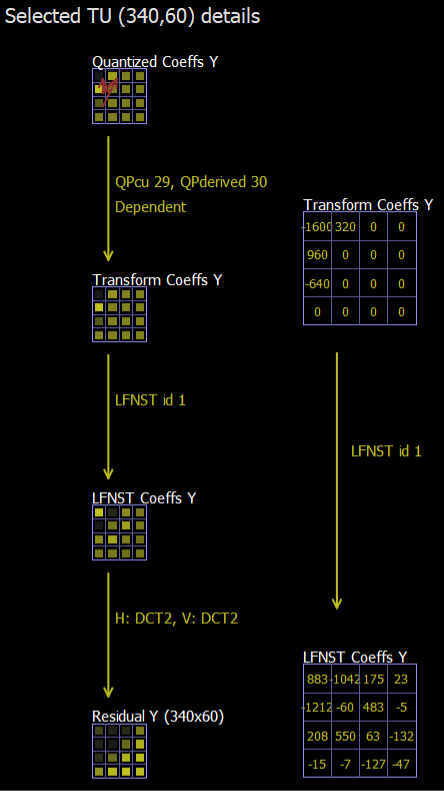

- IST – Intra Secondary Transform. In AV1, separable transforms are applied to intra and inter residual samples for energy compaction and decorrelation. Non-separable transforms such as the Karhunen–Loève transform (KLT) have much better decorrelation properties, but they require far more computational resources. The idea of IST is to apply a secondary non-separable transform only to the low-frequency coefficients of the primary transform, capturing more directionality while keeping complexity low. There are 12 sets of secondary transforms with three kernels in each set, subject to restrictions based on primary transform type and block size; IST is applied to intra luma blocks only. A similar coding tool, the Low-Frequency Non-Separable Transform (LFNST), was earlier adopted into VVC, where it is enabled for both luma and chroma, but only for intra-coded blocks.

- NEW_INTER_MODE – harmonization and simplification of inter modes and of how they are signaled in the bitstream. This contribution also changes the signaling of the DRL index to remove its dependency on motion vector parsing.

- SMVP & TMVP – Spatial and Temporal Motion Vector Prediction – a series of improvements in the efficiency of motion vector prediction and coding.

  TMVP improvements compared to AV1:
  - Blocks coded using a single prediction with backward reference mode can be used for generating the TMVP motion field for future frames.
  - The reference frame scanning order for generating TMVP was redesigned to consider the temporal distance between the reference and current frames.

  SMVP improvements compared to AV1:
  - Added temporal scaling for motion vectors of spatially neighboring blocks if they use a different reference frame than the current block.
  - A compound SMVP can now be composed of 2 different neighboring blocks.
  - Reduced the number of line buffers required for scanning spatial neighbors, which simplifies hardware implementations with a minor effect on compression efficiency. Motion vector prediction still requires three columns of 8x8 units from the left, but only one row of 8x8 units from above (instead of three as in AV1).

- CCSO – Cross Component Sample Offset – Uses Luma channel after deblocking but before CDEF to compute an offset value. The computation involves simple filtering of Luma samples and a small lookup table. This offset value is applied on the Chroma channel after CDEF but before Loop Restoration. Several bytes are signaled at frame level to control offset computation: filter parameters, quantization step, a lookup table. At the CTU level, only one bit per 128x128 Chroma samples is signaled.
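The CCSO data flow described above can be sketched in Python as follows. This is a simplified illustration, not the libaom implementation: the two-neighbor filter shape, the three-level quantization of luma differences, the 9-entry lookup table, and all function names are assumptions. For simplicity it also assumes that luma and chroma planes have the same size.

```python
def quantize_delta(delta, step):
    """Map a luma difference to -1, 0 or 1 using a quantization step."""
    if delta > step:
        return 1
    if delta < -step:
        return -1
    return 0


def ccso_offsets(luma, step, lut, taps=((-1, 0), (1, 0))):
    """Derive one offset per sample from deblocked luma (a list of rows).

    taps holds (dy, dx) neighbor positions; two taps give 3 * 3 = 9
    lookup-table entries. Both are assumptions made for this sketch.
    """
    height, width = len(luma), len(luma[0])
    offsets = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            index = 0
            for dy, dx in taps:
                ny = min(max(y + dy, 0), height - 1)  # clamp at picture edges
                nx = min(max(x + dx, 0), width - 1)
                level = quantize_delta(luma[ny][nx] - luma[y][x], step)
                index = index * 3 + (level + 1)
            offsets[y][x] = lut[index]
    return offsets


def apply_offsets(chroma_after_cdef, offsets, bit_depth=8):
    """Add the offsets to chroma samples and clip to the valid range."""
    max_value = (1 << bit_depth) - 1
    return [
        [min(max(c + o, 0), max_value) for c, o in zip(c_row, o_row)]
        for c_row, o_row in zip(chroma_after_cdef, offsets)
    ]
```

In this sketch the frame-level parameters mentioned in the text correspond to `taps`, `step`, and `lut`, while a block-level on/off flag would simply skip the call to `apply_offsets` for a given block.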

TEST METHODOLOGY AND EVALUATION SETUP

First, we need to set up the common test conditions (CTC): a well-defined environment and aligned coding parameters across encoder implementations, so that the comparison is fair. To avoid reinventing the wheel, we will use the AOM CTC document, which describes best practices for video codec performance evaluation in detail.

Subjective video quality assessment is preferable, because its measurements directly reflect the reactions of the people who view the systems being tested. However, it is a very complex and time-consuming process, so a more common approach is to use objective quality metrics. Although they only approximate the ground truth, they provide a uniform way to compare encoder implementations against each other and help to focus on creating new and better coding tools.

To prepare for our test, we need to:

- Select source video streams.

- Select quality metrics.

- Prepare a codec implementation model and configure its settings.

1. Source video streams selection.

Streams for the CTC can be obtained from the test sequence repository at https://media.xiph.org/video/av2ctc/test_set/. Each video is assigned to a class. The classes represent various scenarios for the codec: natural or synthetic video, screen content, high-motion and static scenes, highly detailed complex video, and simple plain video.

Our article uses only the SDR natural (A*) and synthetic (B1) classes of video streams. HDR and still-image data sets are also available there.

| class | description |
|---|---|
| A1 | 4:2:0, 4K, 10 bit |
| A2 | 4:2:0, 1920x1080p, 8 and 10 bit |
| A3 | 4:2:0, 1280x720p |
| A4 | 4:2:0, 640x360p, 8 bit |
| A5 | 4:2:0, 480x270p |
| B1 | 4:2:0, various resolutions |

2. Quality metrics selection

We will use the following widespread objective full-reference video quality metrics: PSNR, SSIM, VMAF. Metrics implementations are provided by Netflix-developed libvmaf tool, integrated into ViCueSoft video quality analysis tool VQProbe.

3. Codec model and configuration

We will use the AV1 model implementation as the reference and compare it against the AV2 research anchor branch. The commit hashes used are provided below:

| Implementation name | Commit hash |
|---|---|
| libaom av1 master | 48cd0c756fe7e3ffbff4f0ffc38b0ab449f44907 |
| libaom av2 research branch | a1d994fb8daaf5cc9e546d668f0359809fc4aea1 |

Modern encoders have dozens of settings, but in the real world, codecs are usually operated at a few distinct operating points, also known as presets, which restrict the possible setting combinations. In this article, we evaluate the following scenarios:

- All Intra (AI) – only intra coding tools are evaluated. Typical use cases are still images and intermediate video storage in video editing software.

- Random Access (RA) – inter coding tools and frame reordering are evaluated together with intra coding tools. This is the usual format for video-on-demand and video storage.

There are other scenarios that should be considered for a comprehensive evaluation, like live video streaming (usually called Low Delay), but they are outside the scope of this article. Regarding other settings:

- All encoding is done in fixed QP configuration mode; QP parameters are provided below. To get comparable results, we will use the Bjøntegaard metrics (see ITU VCEG-M33 and VCEG-L38). This requires several operating points, that is, several quantization parameters, to obtain several encoded streams and use them to plot the parameterized rate-distortion curve.

- The best quality mode, “--cpu-used=0”, will be evaluated.

- For the A1 class, tiling and threads are allowed: “--tile-columns=1 --threads=2 --row-mt=0”

- All other settings are provided below.

| Scenario | Parameter | Value |
|---|---|---|
| All Intra | QP (--cq-level) | 15, 23, 31, 39, 47, 55 |
| All Intra | Fixed parameters | --cpu-used=0 --passes=1 --end-usage=q --kf-min-dist=0 --kf-max-dist=0 --use-fixed-qp-offsets=1 --deltaq-mode=0 --enable-tpl-model=0 --enable-keyframe-filtering=0 --obu --limit=30 |
| Random Access | QP (--cq-level) | 23, 31, 39, 47, 55, 63 |
| Random Access | Fixed parameters | --cpu-used=0 --passes=1 --lag-in-frames=19 --auto-alt-ref=1 --min-gf-interval=16 --max-gf-interval=16 --gf-min-pyr-height=4 --gf-max-pyr-height=4 --limit=130 --kf-min-dist=65 --kf-max-dist=65 --use-fixed-qp-offsets=1 --deltaq-mode=0 --enable-tpl-model=0 --end-usage=q --enable-keyframe-filtering=0 --obu |
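As an illustration of how these settings turn into encoder invocations, the Python sketch below builds one command line per QP value. The flag values are copied from the settings above; the aomenc binary name, the output file naming scheme, and the function name are assumptions made for this example, and the commands are only built, not executed.

```python
from pathlib import Path

QP_VALUES = {
    "AI": [15, 23, 31, 39, 47, 55],
    "RA": [23, 31, 39, 47, 55, 63],
}
FIXED = {
    "AI": "--cpu-used=0 --passes=1 --end-usage=q --kf-min-dist=0 "
          "--kf-max-dist=0 --use-fixed-qp-offsets=1 --deltaq-mode=0 "
          "--enable-tpl-model=0 --enable-keyframe-filtering=0 --obu --limit=30",
    "RA": "--cpu-used=0 --passes=1 --lag-in-frames=19 --auto-alt-ref=1 "
          "--min-gf-interval=16 --max-gf-interval=16 --gf-min-pyr-height=4 "
          "--gf-max-pyr-height=4 --limit=130 --kf-min-dist=65 --kf-max-dist=65 "
          "--use-fixed-qp-offsets=1 --deltaq-mode=0 --enable-tpl-model=0 "
          "--end-usage=q --enable-keyframe-filtering=0 --obu",
}
A1_EXTRA = "--tile-columns=1 --threads=2 --row-mt=0"  # A1 class only


def encode_commands(stream, scenario, video_class, encoder="aomenc"):
    """Build one encoder command (as an argument list) per QP value."""
    extra = A1_EXTRA.split() if video_class == "A1" else []
    stem = Path(stream).stem
    commands = []
    for qp in QP_VALUES[scenario]:
        output = f"{stem}.{qp}.{scenario}.obu"  # hypothetical naming scheme
        commands.append(
            [encoder, *FIXED[scenario].split(), *extra,
             f"--cq-level={qp}", "-o", output, stream]
        )
    return commands
```

Each returned list can be passed to `subprocess.run(cmd, check=True)` or handed to a test automation framework.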

EXECUTION OF CODEC EVALUATION

This section describes the steps performed for the evaluation, including the environment and the configuration of all tools.

System configuration

- Ubuntu 21.04

- GCC 10.3.0

- AMD Ryzen Threadripper 3960X

- 128 GB DDR4 @ 2667 MHz

Codec configuration

Encoder preparation is simple: for AV1, we check out the "master" branch, and for AV2 research, the research2 branch.

The build step is straightforward, using CMake and make. See https://aomedia.googlesource.com/aom for details.

Streams configuration

Streams are in *.y4m container that is natively supported by all tools, so there is no need for any other conversions.

The test data set preparation

With all inputs prepared, it's time to use the Testbot engine. Testbot is a video codec validation automation framework developed by our team at ViCueSoft. It allows us to run external software while iterating over parameters and streams, monitor its execution, and control its output. More information about the framework's configuration and capabilities is available on the ViCueSoft blog (https://vicuesoft.com/blog/titles/testbot/). For each scenario, we need to produce a set of N encoded streams, where N = <streams> * <qp values>, using the settings from the previous section.

The quality metrics data calculation

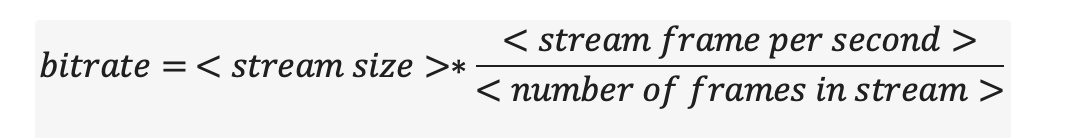

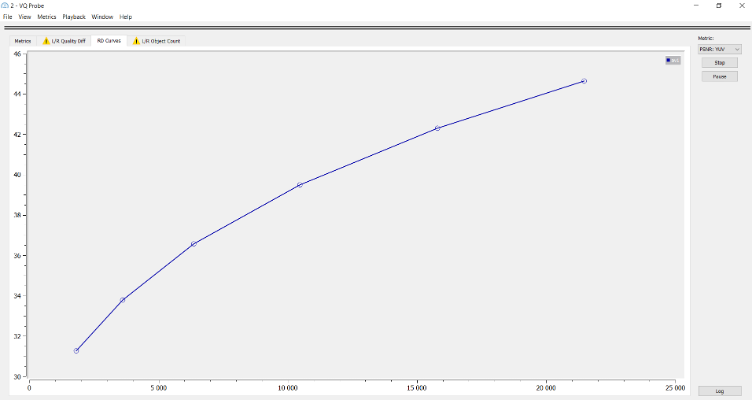

To build the parameterized rate-distortion curve used by the Bjøntegaard metrics, we need to calculate one point of a 2-D plot for every encoded stream. The X-axis shows the actual bitrate, and the Y-axis shows the distortion (that is, the quality metric output).

Real bitrate is calculated using the formula:
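A common definition, assumed here rather than quoted from the measurement scripts, is the size of the encoded stream in bits divided by the clip duration in seconds. A minimal Python sketch under that assumption (the function name is hypothetical):

```python
def bitrate_kbps(size_bytes, frame_count, fps):
    """Average bitrate in kilobits per second.

    Assumes the whole file of size_bytes holds exactly frame_count
    frames played back at fps frames per second.
    """
    duration_seconds = frame_count / fps
    return size_bytes * 8 / duration_seconds / 1000.0


# Example: a 125000-byte stream with 30 frames at 30 fps lasts one second.
print(bitrate_kbps(125000, 30, 30))
```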

For metrics calculation, we will use VQProbe, a professional tool for video quality measurement that wraps libvmaf and thus supports the commonly used quality metrics, such as PSNR, SSIM, and VMAF. More information about VQProbe is available at https://vicuesoft.com/vq-probe.

We use the console interface of VQProbe, which can be seamlessly integrated into automated test pipelines. Let’s configure VQProbe:

Create a project for each stream: ./VQProbeConsole --create {:stream.name}.{:qp}.{:codec}

Add decoded streams: ./VQProbeConsole -a {dec_dir}/{:stream.name}.{:qp}.{:codec}.y4m -p {:stream.name}.{:qp}.{:codec}.vqprb

Add reference stream: ./VQProbeConsole -t ref -a {media_root}/{:stream.path} -p {:stream.name}.{:qp}.{:codec}.vqprb

Calculate quality metrics: ./VQProbeConsole -d {metrics_dir} -p {:stream.name}.{:qp}.{:codec}.vqprb
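For automation, the four steps above can be generated per encoded stream. The Python sketch below only fills in the command templates shown above; the function name and its parameters are hypothetical, and no VQProbe options beyond those listed are assumed.

```python
def vqprobe_commands(stream_name, stream_path, qp, codec,
                     dec_dir, media_root, metrics_dir,
                     tool="./VQProbeConsole"):
    """Return the four VQProbeConsole calls for one encoded stream."""
    project = f"{stream_name}.{qp}.{codec}"
    project_file = f"{project}.vqprb"
    return [
        # 1. Create a project.
        [tool, "--create", project],
        # 2. Add the decoded stream.
        [tool, "-a", f"{dec_dir}/{project}.y4m", "-p", project_file],
        # 3. Add the reference stream.
        [tool, "-t", "ref", "-a", f"{media_root}/{stream_path}", "-p", project_file],
        # 4. Calculate quality metrics.
        [tool, "-d", metrics_dir, "-p", project_file],
    ]


for command in vqprobe_commands("foo", "A2/foo.y4m", 23, "av2",
                                "decoded", "media", "metrics"):
    print(" ".join(command))
```

Each argument list can be executed in order with `subprocess.run(command, check=True)`.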

VQProbe could generate metrics in *.json (better for automation) and *.csv (more readable) formats.

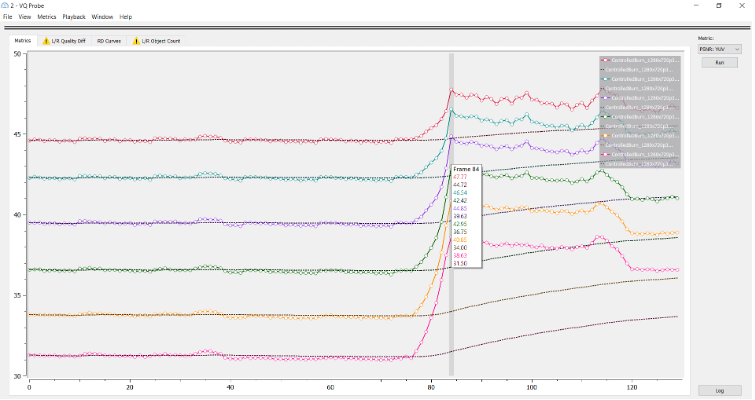

All metrics, average and per-frame could be well visualized in VQ Probe:

Metrics results interpretation

The main idea now is to estimate the area between the two rate-distortion curves (reference and tested codec) to compute the average bit rate savings at equal measured quality: the Bjøntegaard-delta rate (BD-rate). This is done with the help of a piecewise cubic fitting of the curves, where the bit rate is measured in the log domain, and integration determines the area between the two curves.
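The computation can be sketched in a few lines of Python. To stay dependency-free, this sketch replaces the piecewise cubic fit with piecewise-linear interpolation and trapezoidal integration, so its results will differ slightly from those of a CTC-conformant tool. All names are hypothetical, and each curve is assumed to have strictly increasing quality values.

```python
import math


def _interp(x, xs, ys):
    """Linear interpolation on a curve with strictly increasing xs."""
    for i in range(len(xs) - 1):
        if xs[i] <= x <= xs[i + 1]:
            t = (x - xs[i]) / (xs[i + 1] - xs[i])
            return ys[i] + t * (ys[i + 1] - ys[i])
    raise ValueError("x is outside the curve range")


def _mean_log_rate(points, lo, hi):
    """Average log(bitrate) over the quality interval [lo, hi]."""
    ordered = sorted(points, key=lambda p: p[1])
    quality = [q for _, q in ordered]
    log_rate = [math.log(r) for r, _ in ordered]
    knots = [lo] + [q for q in quality if lo < q < hi] + [hi]
    values = [_interp(k, quality, log_rate) for k in knots]
    area = 0.0
    for i in range(len(knots) - 1):
        area += (knots[i + 1] - knots[i]) * (values[i] + values[i + 1]) / 2
    return area / (hi - lo)


def bd_rate(reference, test):
    """BD-rate in percent; negative values mean the test codec saves bitrate.

    Both arguments are lists of (bitrate, quality) points.
    """
    lo = max(min(q for _, q in reference), min(q for _, q in test))
    hi = min(max(q for _, q in reference), max(q for _, q in test))
    if hi <= lo:
        raise ValueError("quality ranges do not overlap")
    diff = _mean_log_rate(test, lo, hi) - _mean_log_rate(reference, lo, hi)
    return (math.exp(diff) - 1.0) * 100.0
```

If the tested codec needs exactly 10% less bitrate than the reference at every quality level, the log-rate difference is constant and `bd_rate` evaluates to about -10.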

AV2 RESEARCH EXPERIMENTAL RESULTS

Averaging the BD-rate values across the streams in each class, we get the following results (BD-rate in %; negative values mean bitrate savings for AV2):

Reference: libaom AV1 with --cpu-used=0.

For AI scenario

| class | PSNR | SSIM | VMAF |
|---|---|---|---|
| A1 | -6.07 | -6.59 | -6.49 |
| A2 | -6.74 | -7.16 | -8.35 |
| A3 | -6.64 | -5.91 | -7.33 |
| A4 | -8.28 | -8.62 | -10.18 |
| A5 | -6.06 | -4.88 | -4.73 |
| B1 | -7.52 | -8.11 | -7.24 |
| Average | -6.88 | -6.88 | -7.39 |

For RA scenario

| class | PSNR | SSIM | VMAF |
|---|---|---|---|
| A1 | -5.27 | -5.55 | -5.97 |
| A2 | -4.22 | -4.60 | -4.17 |
| A3 | -4.7 | -5.23 | -5.29 |
| A4 | -5.08 | -7.46 | -7.66 |
| A5 | -3.73 | -4.3 | -3.5 |
| B1 | -1.12 | -2.61 | -1.69 |
| Average | -4.02 | -4.96 | -4.71 |
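The "Average" row is the plain arithmetic mean of the six class values. As a quick check, the following Python sketch recomputes it for the RA scenario from the numbers in the table; the variable and function names are only illustrative.

```python
from statistics import mean

# Per-class BD-rate values (PSNR, SSIM, VMAF) from the RA table, in percent.
ra_bd_rate = {
    "A1": (-5.27, -5.55, -5.97),
    "A2": (-4.22, -4.60, -4.17),
    "A3": (-4.7, -5.23, -5.29),
    "A4": (-5.08, -7.46, -7.66),
    "A5": (-3.73, -4.3, -3.5),
    "B1": (-1.12, -2.61, -1.69),
}


def column_averages(table):
    """Mean of each metric column, rounded to two decimals."""
    return tuple(round(mean(column), 2) for column in zip(*table.values()))


print(dict(zip(("PSNR", "SSIM", "VMAF"), column_averages(ra_bd_rate))))
```

Note that this gives every class equal weight, regardless of how many streams each class contains.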

CONCLUSION

An extensive performance evaluation of the new coding tool candidates in the AV2 research branch against the libaom AV1 encoder has been presented and discussed. The evaluation was done in single-pass fixed QP mode in the random access and all intra scenarios. According to the experimental results, the coding efficiency shows a solid gain over libaom AV1, even at such an early stage. Notably, the intra tools alone offer an average bitrate saving of about 7.4% in terms of VMAF on a wide range of natural and synthetic video content, while in combination with inter coding tools the saving is 4.7%. Due to the limited availability of computing resources, complexity was not evaluated rigorously, but a rough estimate shows only about a 1.2x increase in encoding complexity and a 1.4x increase in decoding complexity, which suggests that the researchers behind the new standard are very careful about the complexity of the new features.

The new coding tools fit well into the classic hybrid video compression structure, enhancing inter coding, intra coding, transforms, post-filtering, and so on. Several tools overlap with VVC coding tools that have already proved their efficiency, which helped to reduce the time needed to investigate them.

The whole evaluation was possible thanks to ViCueSoft's VQProbe and test automation framework. Their flexibility allows seamless integration and the building of automated test pipelines for complex video codec quality evaluation.

References

1. Xin Zhao, Zhijun (Ryan) Lei, Andrey Norkin, Thomas Daede, Alexis Tourapis, “AV2 Common Test Conditions v1.0”

2. Alliance for Open Media, Press Release. Online: http://aomedia.org

3. ISO/IEC 23090-3:2021, Information technology — Coded representation of immersive media — Part 3: Versatile video coding

4. Peter de Rivaz, Jack Haughton, “AV1 Bitstream & Decoding Process Specification”

5. G. Bjøntegaard, “Calculation of average PSNR differences between RD-Curves,” ITU-T SG16/Q6, Doc. VCEG-M33, Austin, Apr. 2001.

6. ViCueSoft blog, “Objective and Subjective video quality assessment”. Online: https://vicuesoft.com/blog/titles/objective_subjective_vqa

7. ViCueSoft blog, “Testbot — ViCueSoft’s video codecs validation automation framework”. Online: https://vicuesoft.com/blog/titles/testbot/

8. Git repositories on aomedia. Online: https://aomedia.googlesource.com/

9. Test sequences. Online: https://media.xiph.org/video/av2ctc/test_set/

10. VQProbe. Online: https://vicuesoft.com/vq-probe

11. Michel Kerdranvat, Ya Chen, Rémi Jullian, Franck Galpin, Edouard François, “The video codec landscape in 2020,” InterDigital R&D